Many thanks to all of the amazing participants from around the globe who joined the UNC Institute on Implementation Science virtual session on November 17th. The webinar included:

- a live demonstration of Scotland’s digital resource, Early Intervention Framework for Children and Young People’s Mental Health and Mental Wellbeing, designed to support service providers in making fully informed decisions about interventions that are implementable and sustainable for their specific context, and

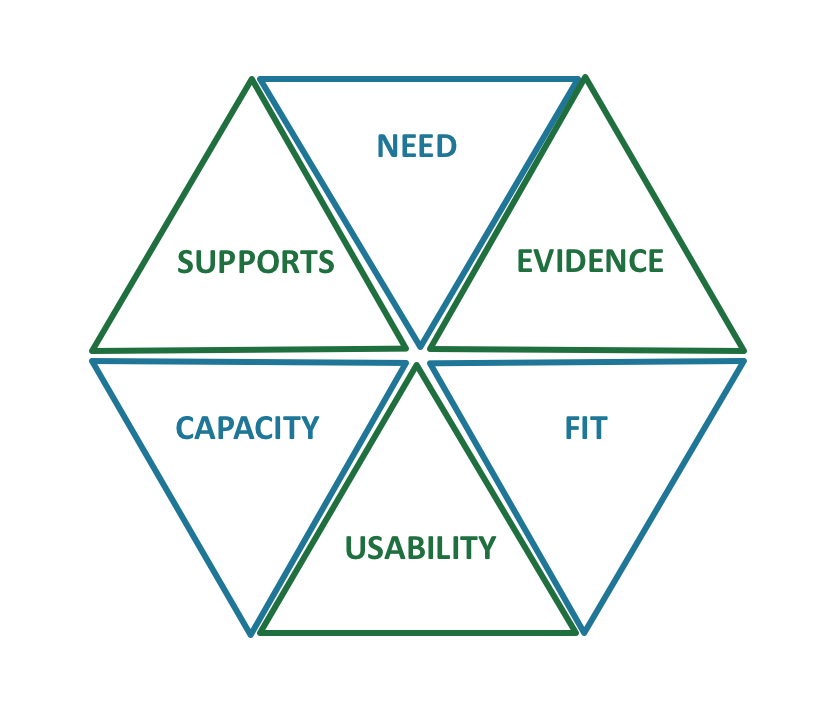

- a discussion of the newly revised Hexagon Tool and Discussion Guide, an assessment of contextual fit and feasibility developed by the National Implementation Research Network, and how it can be used to integrate race equity considerations.

If you missed our event, check out the recorded webinar and resources here.

While we were able to respond to some questions and comments during the session, we didn’t have time for them all! We are grateful for participants’ engagement and thought-provoking feedback. To honor the conversation, we are posting the full set of questions with answers below. If you have further feedback on the newly revised Hexagon Tool, please reach out to Allison Metz (allison.metz@unc.edu) or Laura Louison (louison@email.unc.edu).

Scotland’s Digital Resource Presentation

I would like to suggest a reflection on the use of the word "intervention" when talking about work with families. I use the word "enrichment"--it's strengths-based and reflects the orientation of the work I do.

Your suggested word ‘enrichment’ is a great one and aligns very strongly with the empowering and strengths-based approach that we see as underpinning any work with children and families.

Can you describe how "deprivation" is calculated? Is there a composite score approach?

We use a measure called the Scottish Index of Multiple Deprivation (SIMD), which is the Scottish Government's standard approach to identifying areas of multiple deprivation in Scotland. SIMD is a relative measure of deprivation across almost 7,000 small geographic areas (called data zones). It looks at the extent to which an area is deprived across seven domains: income, employment, education, health, access to services, crime and housing. We use SIMD quintiles, which split the data zones into five groups, each containing 20% of Scotland’s data zones. Because SIMD is an area-based measure, not every person in a highly deprived area will themselves be experiencing high levels of deprivation. NHS Education for Scotland are not involved in calculating the deprivation of any area; however, we utilise the Scottish Government data that is available for every community in Scotland.
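To make the quintile idea concrete, here is a minimal sketch of how ranked areas can be split into five equal-sized groups. It is an illustration only, not the Scottish Government's published method: the function name `assign_quintiles`, the data zone codes and the ranks are all hypothetical, and the sketch assumes that rank 1 marks the most deprived area and that quintile 1 is the most deprived 20%.

```python
def assign_quintiles(ranks):
    """Split areas into five equal-sized groups by deprivation rank.

    `ranks` maps a (hypothetical) data zone code to its overall rank.
    Assumption: rank 1 = most deprived, so quintile 1 = most deprived 20%.
    """
    n = len(ranks)
    # Order the data zones from most deprived (lowest rank) to least deprived.
    ordered = sorted(ranks, key=ranks.get)
    # The position in the ordering decides the quintile (1 to 5).
    return {zone: (position * 5) // n + 1 for position, zone in enumerate(ordered)}


# Ten made-up data zones ranked 1 to 10: two zones land in each quintile.
example_ranks = {f"DZ{i:02d}": i for i in range(1, 11)}
example_quintiles = assign_quintiles(example_ranks)
```

Because the grouping depends only on an area's position relative to other areas, the result is a relative measure: it says how an area compares with the rest of the country, not how deprived any individual resident is.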

You mentioned that you adapted the Hexagon Tool for your program. Could you please tell us a bit more about that?

With permission from NIRN, we adapted the 2018 version of the Hexagon Tool in several ways. Firstly, we changed the order of the six indicators so that they reflected the order in which we wanted services to examine programmes (within the Programme Indicators, looking first at Usability and Supports before looking at Evidence, and then for the Implementing Site Indicators, working through Fit, Capacity and Need). We did not want Evidence to be the first dimension that users looked at, as we did not want users to default to making decisions guided by evidence alone. We also made some minor changes to the wording of the questions so that they were more accessible to a Scottish population. For every programme featured in the Early Intervention Framework, the wording of the Implementing Site Indicator questions has been individualised specifically for that programme. Furthermore, we made some alterations to the scoring for the Evidence indicator, allowing scores of 1, 1+, 2, 2+, 3, 3+, 4, 4+ and 5 (rather than just 1-5). We felt that we needed these additional levels within the scoring, as we would need to be able to discriminate between over 100 programmes. Finally, we altered some of the graphics to fit in with the general design of the Early Intervention Framework.

Have you worked with the model developers to complete the information?

We have been enormously fortunate to have been able to work with almost all programme developers in producing the information for each intervention included in the Early Intervention Framework. In most cases, this has included having an interview with the programme developers. They have also been generous in sharing resources and materials from the programmes themselves to help us with this process.

How far did you feel you were able to address the key implementation dimensions, given that they are often not well covered in published evidence about programmes? How did you cover the ground and what weren’t you able to cover so well?

Through working with the programme developers themselves, we have been able to describe all six dimensions of the Hexagon. This has included programme developers sharing materials and resources to allow us to better understand and represent the programmes in the summaries as well as, in most cases, also having an interview with the programme developers themselves, where they were able to fill in detail that we could not gather from elsewhere. On the rare occasions that we were not able to connect with programme developers, we gathered as much information as we could from the research published on the intervention, and from any other resources that we were able to find through literature and web searches (such as programme websites, which often include details about things like training and materials needed to deliver the intervention).

How did you select the Hexagon tool? What other tools were considered? Essentially: what was the decision-making process like?

We had a long history of working with Allison Metz and the team at NIRN, in relation to supporting the implementation of other strands of work that we were delivering. We were familiar with NIRN’s implementation frameworks and resources and saw them as a starting point for how we integrated implementation considerations into the resource. We wanted to move beyond just providing information on an intervention’s evidence and wanted to include detailed information about the intervention itself (including core components), information about the implementation supports that would be available from the programme developers, as well as the supports that services would need in order to be able to implement successfully. What was essential was that we included context. One of the aims of the resource was that it would enable decision making that was informed by the local context, and so we needed a strong contextual fit element to the resource. Allison is part of our Executive Team that has been leading on this development, and she suggested that we look at the Hexagon Tool to see whether it could form the architecture for the Framework. When we looked at the range of indicators captured in the Hexagon Tool, it aligned so well with the vision that we had been trying to achieve that we chose to adopt it for our resource.

My understanding is that the key purpose for the rating on the Hexagon components is to aid and guide decision-making. Do you or the program leaders use the rating for accountability purposes for the program? Why or why not?

We see the ratings of the indicators as a way to aid and guide decision making, but not only when choosing a new intervention to implement. We think that the ratings can be used as part of a review process to see how the implementation of the intervention is progressing, whether it is still being rated in a similar way, and whether it is still aligning strongly with the contextual need and fit of the local community. We have also used the ratings as part of a quality assurance process, to demonstrate the decision-making process that has been undertaken when prioritizing and justifying investment decisions.

What if the program is new and was developed by a more community-based group that didn’t have the same resources as larger models? Is that why they didn’t lead with evidence?

New programmes that have not yet been evaluated or examined through research can still be included in the Early Intervention Framework. With respect to evidence, an intervention only needs to be able to demonstrate that it is guided by a well-developed theory of change or logic model (including clear inclusion and exclusion criteria for the target population) for it to be potentially included in the resource. We have a number of interventions included in the Early Intervention Framework that have not been examined through any evaluation or research. We did not want to set the threshold for evidence so high that such locally developed interventions could not be included.

The Hexagon Tool & Discussion Guide

Did you think about how smaller more community-based orgs (that may be BIPOC run and serve the most disadvantaged populations) may be overburdened in rigorously documenting outcomes? Or how meaningful in-depth community work with small sample sizes may be seen as less scalable?

We hope that the revised Hexagon better honors the creation of community-driven evidence by including questions about evaluation data, practice-based evidence and theories of change in the Evidence indicator. By including these new questions in the evidence rating, it is possible for users to better evaluate evidence of programs or practices that were not created in academic settings. However, this does not address the challenge of under-resourced and undervalued community organizations that do not have the time or capacity to document, evaluate and market their work. We also encourage users of the Hexagon to assess all six areas when selecting an intervention. The Hexagon Tool values research evidence, but does not privilege research evidence over the other five areas of the Hexagon, which should be given equal consideration when making decisions.

Is there a link for the stakeholder guide?

Yes! You can find it on our event page here. Many thanks to the amazing work of Lift Every Voice and the Collective Impact Forum for making this available.

As a user of the Hexagon Tool for 3 years I appreciate the changes; while the Hexagon Tool attempts to address culturally responsive and inclusive curriculum through Fit, is there an instrument/set of questions to parse this out even further?

The Hexagon’s Supports indicator includes two related questions:

- Are there curricula and/or other resources related to the program or practice readily available? If so, list publisher or links. Are the materials representative of the focus population who will be receiving and delivering the program or practice? What is the cost of these materials?

- Is training and professional development related to the program or practice readily available? Is training culturally sensitive? Does the training use adult learning best practices? Does it address issues of race equity, cultural responsiveness or implicit bias? Include the source of training and professional development. What is the cost of this training?

Your team may identify other important considerations for culturally responsive and inclusive curricula, such as language, reading level or how different points of view are represented. We are not aware of an assessment tool specifically addressing this topic that can be used in human services programs but know such tools have been developed for education settings, like the “Culturally Responsive Scorecard.”

I think we should also be considering system level changes vs programmatic changes: are we focusing on the fish or the stream?

The Hexagon is focused primarily on the organization level and considers organizational change factors. While the Fit indicator does identify potential intersections with other initiatives, community values and priorities of the implementing site, it does not consider all of the systems-level changes needed. This is a critical factor when we think about programs that may not align with larger systemic issues, like state or federal policies or legislative and funding priorities, which would require systems-level changes for the program to be successfully implemented. Thank you for raising this!

Can you compare this tool to the ORCA? I love the call out to equity.

We have not used the ORCA tool in our work at NIRN, but it does assess evidence, context and facilitation. The ORCA is primarily a research tool, while the Hexagon is a tool used to promote discussion and decision making for an implementation team.