“If you can’t measure it, you can’t improve it.” It’s a simple quote from Peter Drucker, yet powerful and so very true. I recently received personal nutrition coaching and dreaded the weekly weigh-ins and monthly measurements, but I quickly realized that without them I wouldn’t have known whether the changes I was making were working. I also had to enter daily data on my adherence to macronutrient percentages, submit weekly photos of my meal prep, share my grocery lists, and document my use of coping strategies while traveling for work. All of this helped me problem-solve when the scale or measurements weren’t moving or were going in the wrong direction. Yes, it was intense, but well worth it!

What do you measure in your implementation work to guide improvement efforts?

To put Drucker’s quote into action and measure what is important, we have learned to assess an organization’s implementation capacity: the organization’s ability to use implementation methods and techniques to adopt, use, and sustain evidence-based practices or programs. Implementation teams and leadership can use capacity assessment data to identify strengths and areas for planning related to leadership and teaming structures; systems for developing and improving competency supports (e.g., selection, training, coaching, fidelity); and organizational strategies for analyzing, communicating, and using data for continuous improvement. As an implementation coach, I can use capacity assessment data to decide where to intensify my supports, and the data also provide feedback on how well those supports are working.

In addition to measuring implementation capacity, we also find it critical to measure fidelity in our implementation work. These two types of data, capacity and fidelity, are not the same, though people often confuse them or use the terms interchangeably. Fidelity measures assess the degree to which something is being implemented as intended. Fidelity data answer questions such as: Are those implementing the evidence-based practice adhering to its core components? Is the practice being implemented with the intended frequency and duration (i.e., dosage)? Is the practice being implemented with high quality?

Fidelity data have been more commonly used; however, fidelity measures that are specific to a given evidence-based practice cannot be generalized across practices or programs. Implementing one practice with fidelity doesn’t mean an organization will automatically be able to implement another practice with fidelity. A strong infrastructure is necessary for selecting, supporting the implementation of, continuously improving, and sustaining evidence-based practices. This is where capacity assessments are key. The need for a measure of core implementation components that is generalizable across innovations led NIRN’s K-12 team, the State Implementation and Scaling-up of Evidence-based Practices (SISEP) Center, to develop capacity assessments.

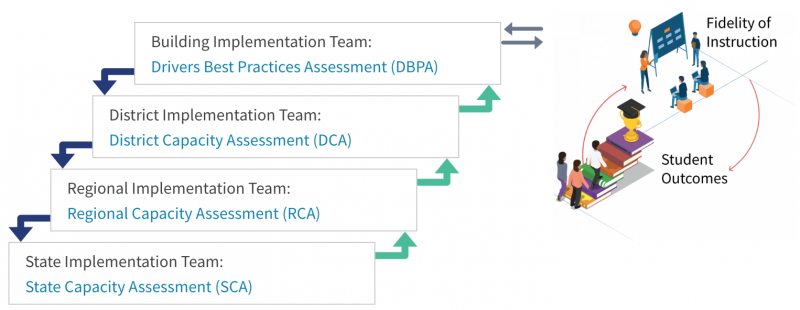

The SISEP team uses assessments to measure capacity across a linked teaming structure in education: State Capacity Assessment (SCA) at the state education agency level, Regional Capacity Assessment (RCA) at the regional or county level, District Capacity Assessment (DCA) at the district level, and the Drivers Best Practices Assessment (DBPA) at the school/building level.

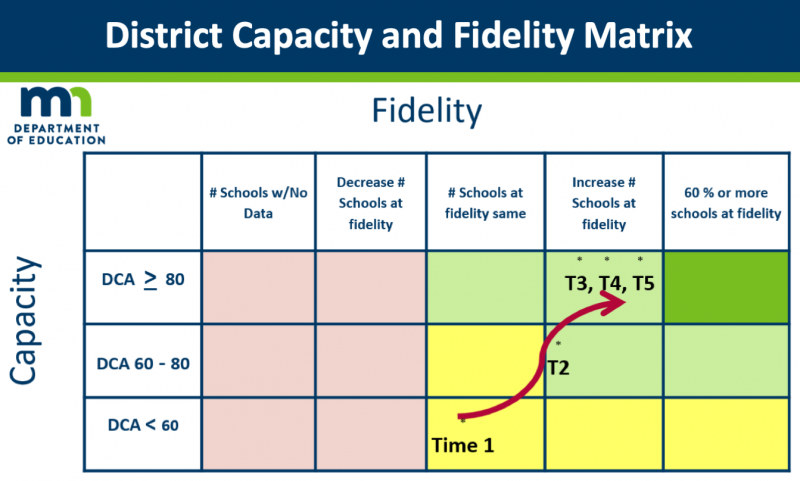

For example, the Minnesota Department of Education (MDE) examines the connections between fidelity and capacity data in its districts. As shown in the accompanying matrix, one district saw its capacity scores increase over time along with the number of schools implementing with fidelity.

Although these data cannot establish causation, they suggest that using capacity assessments to guide the building and refining of the infrastructure that supports implementers, along with fidelity data to gauge how effective that support is, may increase the likelihood that practices are implemented with fidelity. Additionally, implementation with fidelity may be more likely to be sustained over time when the infrastructure is solidly in place.

Measuring fidelity without measuring capacity doesn’t ensure you will have the systems to sustain well implemented (high fidelity) work; measuring capacity without measuring fidelity indicates potential for effective implementation, but doesn’t inform the delivery of practice to impact student outcomes. They interact with each other in different ways depending on where, in this case, a district is on their implementation journey.

-E. Kloos, Minnesota Department of Education

Interested in learning how to administer a capacity assessment?

Check out our new online, interactive Capacity Assessment Administration Course! The course provides an overview of all of our capacity assessments and instructions for administering them, and its interactive scenarios give participants opportunities to practice the administration process.

A special thank you to the Minnesota Department of Education for sharing their learning and experience in measuring implementation capacity and fidelity.